Xilinx


Programming with Vitis AI

Vitis AI offers a unified set of high-level C++/Python programming APIs to run AI applications across edge-to-cloud platforms, including DPU for Alveo and DPU for Zynq UltraScale+ MPSoC and Zynq-7000. This makes it easy to port AI applications from cloud to edge and vice versa. Seven samples in VART Samples are available to help you get familiar with the unified programming APIs.

| ID | Example Name | Models | Framework | Notes |
|---|---|---|---|---|
| 1 | resnet50 | ResNet50 | Caffe | Image classification with VART C++ APIs. |
| 2 | resnet50_mt_py | ResNet50 | TensorFlow | Multi-threading image classification with VART Python APIs. |
| 3 | inception_v1_mt_py | Inception-v1 | TensorFlow | Multi-threading image classification with VART Python APIs. |
| 4 | pose_detection | SSD, Pose detection | Caffe | Pose detection with VART C++ APIs. |
| 5 | video_analysis | SSD | Caffe | Traffic detection with VART C++ APIs. |
| 6 | adas_detection | YOLO-v3 | Caffe | ADAS detection with VART C++ APIs. |
| 7 | segmentation | FPN | Caffe | Semantic segmentation with VART C++ APIs. |

Introduction

CHaiDNN is a Xilinx Deep Neural Network library for accelerating deep neural networks on Xilinx UltraScale MPSoCs. It is designed for maximum compute efficiency with the 6-bit integer data type, and it also supports the 8-bit integer data type.

The design goal of CHaiDNN is to achieve the best accuracy at maximum performance. Inference on CHaiDNN runs in the fixed-point domain for better performance. All feature maps and trained parameters are converted from single-precision floating point to fixed point based on precision parameters specified by the user. These precision parameters can vary widely between networks and datasets, and even between layers of the same network. The accuracy of a network depends on the precision parameters used to represent its feature maps and trained parameters. Well-chosen precision parameters are expected to give accuracy close to that of the single-precision model.
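To make the float-to-fixed-point conversion more concrete, here is a minimal Python sketch of generic signed fixed-point quantization. It is not CHaiDNN's actual quantizer: the function names, the choice of "bit width plus number of fractional bits" as the precision parameters, and the sample values are all assumptions made for illustration.

```python
def quantize_fixed_point(values, bit_width, frac_bits):
    """Map floats to signed fixed-point integer codes (illustrative only).

    bit_width: total number of bits, including the sign bit (assumed parameter).
    frac_bits: number of fractional bits (assumed parameter).
    """
    scale = 2 ** frac_bits
    qmin = -(2 ** (bit_width - 1))      # most negative representable code
    qmax = 2 ** (bit_width - 1) - 1     # most positive representable code
    # Scale, round to the nearest integer, then saturate to the valid range.
    return [max(qmin, min(qmax, round(v * scale))) for v in values]


def dequantize_fixed_point(codes, frac_bits):
    """Convert fixed-point integer codes back to floats."""
    scale = 2 ** frac_bits
    return [c / scale for c in codes]


# Hypothetical sample values; 2.5 is outside the 6-bit range and saturates.
weights = [0.5, -0.26, 0.9, 2.5]

codes_6bit = quantize_fixed_point(weights, bit_width=6, frac_bits=4)
codes_8bit = quantize_fixed_point(weights, bit_width=8, frac_bits=6)

print(codes_6bit, dequantize_fixed_point(codes_6bit, frac_bits=4))
print(codes_8bit, dequantize_fixed_point(codes_8bit, frac_bits=6))
```

Comparing the two printed lines shows why the choice of precision parameters matters: the same values round differently, and saturate at different points, depending on the bit width and the number of fractional bits.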

Products

Xilinx produces programmable logic devices: FPGAs (Field Programmable Gate Arrays) and reprogrammable devices with the traditional PAL architecture (Complex Programmable Logic Devices, or CPLDs), along with tools for designing and debugging them. These products are used in digital information processing equipment, for example in telecommunications and communication systems, computing hardware, peripheral and test equipment, and household appliances. The company produces its chips in various package types and in several grades, including industrial, military, and radiation-hardened.

The Xilinx Zynq UltraScale+ RFSoC family of devices integrates up to 16 high-speed ADCs and DACs.

Getting Started

Two options are available for installing the containers with the Vitis AI tools and resources.

- Use the pre-built container (CPU only)

- Build containers locally with Docker recipes: Docker Recipes

Installation

- Install Docker, if it is not already installed on your machine.

- Clone the Vitis-AI repository to obtain the examples, reference code, and scripts.

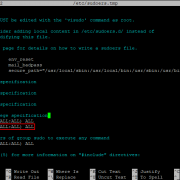

    git clone --recurse-submodules https://github.com/Xilinx/Vitis-AI
    cd Vitis-AI

Using Pre-built Docker

Download the latest Vitis AI Docker image with the following command. This container runs on the CPU.

To run the Docker container, use the following command:

Building Docker from Recipe

Two types of Docker recipes are provided: a CPU recipe and a GPU recipe. If you have a compatible NVIDIA graphics card with CUDA support, you can use the GPU recipe; otherwise, use the CPU recipe.

CPU Docker

Use the commands below to build the CPU Docker image:

To run the CPU Docker container, use the following command:

GPU Docker

Use the commands below to build the GPU Docker image:

To run the GPU Docker container, use the following command:

Use the file ./docker_run.sh as a reference for your Docker launch scripts, and modify it as needed.

More details can be found in Run Docker Container.

Advanced — X11 Support for Examples on Alveo

Some examples in VART and Vitis-AI-Library for Alveo cards need X11 support to display images. This requires an X11 server on your terminal and some modifications to the **./docker_run.sh** file to enable image display. For example, you could use the following script to start the Vitis-AI CPU docker for Alveo with X11 support.

Before running this script, make sure that either a local X11 server is running (if you use a Windows-based SSH terminal to connect to a remote server) or you have run the xhost + command in a terminal (if you use Linux with a desktop). If you connect to the remote server over SSH, also remember to enable X11 forwarding, either in the settings of your Windows SSH tool or with the -X option on the ssh command line.

- VART

- Vitis AI Library

- Alveo U200/U250

- Vitis AI DNNDK samples

History

The company was founded by the engineer-entrepreneurs Bernie Vonderschmitt, Jim Barnett, and Ross Freeman, the inventor of the concept of a user-programmable gate array (Field Programmable Gate Array, or FPGA). In addition to all the advantages of standard gate arrays, an FPGA let the designer of an electronic device reconfigure the chip at the workbench. This provided fundamentally new means of correcting errors and substantially shortened the time to market for new designs.

The gate arrays that existed at the time were programmed to the customer's specification directly by the IC manufacturer. Freeman's proposal found no takers among the large manufacturers: "it required a lot of transistors, and in those years transistors were worth their weight in gold." Vonderschmitt, previously a semiconductor manufacturing manager at RCA, was convinced that companies running their own cumbersome fabrication plants were inefficient. He proposed to Freeman the then-new fabless company model, in which all manufacturing functions would be subcontracted to independent foundries. Xilinx operates under this model to this day.

Xilinx's first product was the XC2064 user-programmable array. After Freeman's death, the company went public. The following year, Xilinx released the XC4000 family of programmable logic, which became, in effect, the first FPGA to see mass adoption.

How to Download the Repository

To get a local copy of the CHaiDNN repository, configure git-lfs and then clone the repository to the local system with the following command:

    git clone https://github.com/Xilinx/CHaiDNN.git CHaiDNN

The final argument, CHaiDNN, is the name of the directory where the repository will be stored on the local system. This command needs to be executed only once to retrieve the latest version of the CHaiDNN library.

GitHub Repository Structure

    CHaiDNN/
    |
    |-- CONTRIBUTING.md
    |-- LICENSE
    |-- README.md
    |-- SD_Card
    |   |-- lib
    |   |-- cblas
    |   |-- images
    |   |-- opencv
    |   |-- protobuf
    |   |-- zcu102
    |   `-- zcu104
    |-- design
    |   |-- build
    |   |-- conv
    |   |-- deconv
    |   |-- pool
    |   `-- wrapper
    |-- docs
    |   |-- API.md
    |   |-- BUILD_USING_SDX_GUI.md
    |   |-- CONFIGURABLE_PARAMS.md
    |   |-- CUSTOM_PLATFORM_GEN.md
    |   |-- HW_SW_PARTITIONING.md
    |   |-- MODELZOO.md
    |   |-- PERFORMANCE_SNAPSHOT.md
    |   |-- QUANTIZATION.md
    |   |-- RUN_NEW_NETWORK.md
    |   |-- SOFTWARE_LAYER_PLUGIN.md
    |   |-- SUPPORTED_LAYERS.md
    |   `-- images
    |-- software
    |   |-- bufmgmt
    |   |-- checkers
    |   |-- common
    |   |-- custom
    |   |-- example
    |   |-- imageread
    |   |-- include
    |   |-- init
    |   |-- interface
    |   |-- scheduler
    |   |-- scripts
    |   |-- swkernels
    |   `-- xtract
    `-- tools
        |-- SETUP_TOOLS.md
        `-- tools.zip
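After cloning, a quick sanity check that the top-level entries listed above are present can catch an incomplete checkout early. The following Python sketch is one way to do that. The helper name `missing_entries` is hypothetical, and the clone directory name `CHaiDNN` is taken from the clone command; adjust the path if you cloned elsewhere.

```python
from pathlib import Path

# Top-level entries taken from the repository structure listed above.
EXPECTED_TOP_LEVEL = [
    "CONTRIBUTING.md",
    "LICENSE",
    "README.md",
    "SD_Card",
    "design",
    "docs",
    "software",
    "tools",
]


def missing_entries(repo_root, expected=EXPECTED_TOP_LEVEL):
    """Return the expected names that do not exist under repo_root."""
    root = Path(repo_root)
    return [name for name in expected if not (root / name).exists()]


# An empty list means every expected entry was found in ./CHaiDNN.
print(missing_entries("CHaiDNN"))
```

The check only looks at names, not contents, so it cannot tell whether large files were actually fetched.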

Run Inference

Using Pre-built binaries

To run inference on example networks, follow these steps:

- Place the downloaded and unzipped contents in the «» directory. Create the directory if it does not already exist.

- Copy the required contents of the «» folder onto an SD card:

  - opencv

  - protobuf

  - cblas

  - images

  - bitstream, boot loader, lib, and executables (either from or )

- Insert the SD card and power on the board.

- Attach a USB-UART cable from the board to the host PC. Set the UART serial port to

- After the boot sequence, set the LD_LIBRARY_PATH environment variable.

      export OPENBLAS_NUM_THREADS=2
      export LD_LIBRARY_PATH=lib/:opencv/arm64/lib/:protobuf/arm64/lib:cblas/arm64/lib

- Create a folder «» inside the network directory to save the outputs.

- Execute the «» file to run inference.

  - The format for running these example networks is:

        ./<example network>.elf <quantization scheme> <bit width> <img1_path> <img2_path>

  - For GoogleNet 6-bit inference with the Xilinx quantization scheme, execute the following:

        ./googlenet.elf Xilinx 6 images/camel.jpg images/goldfish.JPEG

- Sync after execution.

      cd /
      sync
      umount /mnt

- The output is written to a text file inside the respective output folder.
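When running several networks or image pairs, it can help to assemble the command line programmatically from the format given in the steps above. The Python sketch below does only that. The helper name `build_inference_command` is hypothetical, and restricting the bit width to 6 or 8 is an assumption based on the two integer data types mentioned in the introduction.

```python
import shlex

# Assumption: only the two integer widths named in the introduction are valid.
SUPPORTED_BIT_WIDTHS = (6, 8)


def build_inference_command(network, scheme, bit_width, img1_path, img2_path):
    """Return the argument list for
    ./<example network>.elf <quantization scheme> <bit width> <img1_path> <img2_path>
    """
    if bit_width not in SUPPORTED_BIT_WIDTHS:
        raise ValueError(
            f"bit width must be one of {SUPPORTED_BIT_WIDTHS}, got {bit_width}"
        )
    return [f"./{network}.elf", scheme, str(bit_width), img1_path, img2_path]


cmd = build_inference_command(
    "googlenet", "Xilinx", 6, "images/camel.jpg", "images/goldfish.JPEG"
)
# shlex.join quotes arguments where needed, so the string is safe to paste
# into a shell on the board.
print(shlex.join(cmd))
```

Returning a list rather than a single string keeps the arguments separate, so the same value can be passed directly to `subprocess.run` when the script runs on the board itself.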

Build from Source

CHaiDNN can be built using Makefiles or using the SDx IDE. The steps below describe how to build CHaiDNN using Makefiles. For steps to build using the SDx IDE, see the instructions in Build using SDx IDE.

Build CHaiDNN Hardware

Follow these steps to build the design for the zcu102 (a ZU9 device-based board).

- Generate a custom platform with 1x and 2x clocks using the steps described here. With Chai-v2, the DSPs operate at twice the frequency of the rest of the core.

- Go to folder.

- Set the SDx tool environment.

  - For BASH:

        source <SDx Installation Dir>/installs/lin64/SDx/2018.2/settings64.sh

  - For CSH:

        source <SDx Installation Dir>/installs/lin64/SDx/2018.2/settings64.csh

- To build the design, run the Makefile. By default, this builds the 1024-DSP design at 200/400 MHz.

      make ultraclean
      make

- After the build is completed, copy the file and other build files (, and directory) inside to directory.

      make copy

- The hardware setup is now ready.

Build CHaiDNN Software

Follow the steps to compile the software stack.

- Copy to directory. The file can be found in the directory once the HW build is finished. You can skip this step if you have already copied the file to the suggested directory.

- Set the SDx tool environment.

  - For CSH:

        source <SDx Installation Dir>/installs/lin64/SDx/2018.2/settings64.csh

  - For BASH:

        source <SDx Installation Dir>/installs/lin64/SDx/2018.2/settings64.sh

- Go to the directory. This contains all the files needed to generate the software libraries (.so).

      cd <path to CHaiDNN>/software

- Go to directory, open and update the variable. See example below.

- Now run the following commands.

      make ultraclean
      make

- Make will copy all executables to directory and all files to directory.

- You are now set to run inference. Follow the steps mentioned in «»