Резервное копирование postgresql. бэкап базы postgresql. восстановление postgresql

Содержание:

Notes

Since pg_dumpall calls pg_dump internally, some diagnostic messages will refer to pg_dump.

The option can be useful even when your intention is to restore the dump script into a fresh cluster. Use of authorizes the script to drop and re-create the built-in and databases, ensuring that those databases will retain the same properties (for instance, locale and encoding) that they had in the source cluster. Without the option, those databases will retain their existing database-level properties, as well as any pre-existing contents.

Once restored, it is wise to run on each database so the optimizer has useful statistics. You can also run to analyze all databases.

The dump script should not be expected to run completely without errors. In particular, because the script will issue for every role existing in the source cluster, it is certain to get a “role already exists” error for the bootstrap superuser, unless the destination cluster was initialized with a different bootstrap superuser name. This error is harmless and should be ignored. Use of the option is likely to produce additional harmless error messages about non-existent objects, although you can minimize those by adding .

Examples

To dump a database called mydb into a SQL-script file:

$ pg_dump mydb > db.sql

To reload such a script into a (freshly created) database named newdb:

$ psql -d newdb -f db.sql

To dump a database into a custom-format archive file:

$ pg_dump -Fc mydb > db.dump

To dump a database into a directory-format archive:

$ pg_dump -Fd mydb -f dumpdir

To dump a database into a directory-format archive in parallel with 5 worker jobs:

$ pg_dump -Fd mydb -j 5 -f dumpdir

To reload an archive file into a (freshly created) database named newdb:

$ pg_restore -d newdb db.dump

To dump a single table named mytab:

$ pg_dump -t mytab mydb > db.sql

To dump all tables whose names start with emp in the detroit schema, except for the table named employee_log:

$ pg_dump -t 'detroit.emp*' -T detroit.employee_log mydb > db.sql

To dump all schemas whose names start with east or west and end in gsm, excluding any schemas whose names contain the word test:

$ pg_dump -n 'east*gsm' -n 'west*gsm' -N '*test*' mydb > db.sql

The same, using regular expression notation to consolidate the switches:

$ pg_dump -n '(east|west)*gsm' -N '*test*' mydb > db.sql

To dump all database objects except for tables whose names begin with ts_:

$ pg_dump -T 'ts_*' mydb > db.sql

To specify an upper-case or mixed-case name in -t and related switches, you need to double-quote the name; else it will be folded to lower case (see ). But double quotes are special to the shell, so in turn they must be quoted. Thus, to dump a single table with a mixed-case name, you need something like

Description

pg_dump is a utility for

backing up a PostgreSQL database.

It makes consistent backups even if the database is being used

concurrently. pg_dump does not

block other users accessing the database (readers or writers).

Dumps can be output in script or archive file formats. Script

dumps are plain-text files containing the SQL commands required to

reconstruct the database to the state it was in at the time it was

saved. To restore from such a script, feed it to psql. Script

files can be used to reconstruct the database even on other

machines and other architectures; with some modifications, even on

other SQL database products.

The alternative archive file formats must be used with pg_restore to rebuild the database. They

allow pg_restore to be selective

about what is restored, or even to reorder the items prior to being

restored. The archive file formats are designed to be portable

across architectures.

When used with one of the archive file formats and combined with

pg_restore, pg_dump provides a flexible archival and

transfer mechanism. pg_dump can be

used to backup an entire database, then pg_restore can be used to examine the archive

and/or select which parts of the database are to be restored. The

most flexible output file formats are the «custom» format (-Fc) and

the «directory» format (-Fd). They allow for selection and reordering of all

archived items, support parallel restoration, and are compressed by

default. The «directory» format is the

only format that supports parallel dumps.

Notes

If your database cluster has any local additions to the template1 database, be careful to restore the output of pg_dump into a truly empty database; otherwise you are likely to get errors due to duplicate definitions of the added objects. To make an empty database without any local additions, copy from template0 not template1, for example:

CREATE DATABASE foo WITH TEMPLATE template0;

When a data-only dump is chosen and the option --disable-triggers is used, pg_dump emits commands to disable triggers on user tables before inserting the data, and then commands to re-enable them after the data has been inserted. If the restore is stopped in the middle, the system catalogs might be left in the wrong state.

The dump file produced by pg_dump does not contain the statistics used by the optimizer to make query planning decisions. Therefore, it is wise to run ANALYZE after restoring from a dump file to ensure optimal performance; see and for more information. The dump file also does not contain any ALTER DATABASE ... SET commands; these settings are dumped by pg_dumpall, along with database users and other installation-wide settings.

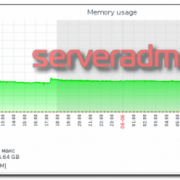

Обновление статистики и реиндексация в postgresql

С бэкапами разобрались, теперь настроим регламентные операции на уровне субд, чтобы поддерживать быстродействие базы данных. Тут особых комментариев не будет, в интернете очень много информации на тему регламентных заданий для баз 1С. Я просто приведу пример того, как это выглядит в postgresql.

Выполняем очистку и анализ базы данных 1С:

# vacuumdb --full --analyze --username postgres --dbname base1c

Реиндексация таблиц базы данных:

# reindexdb --username postgres --dbname base1c

Завернем все это в скрипт с логированием времени выполнения команд:

# cat /root/bin/service-sql.sh

#!/bin/sh # Записываем информацию в лог echo "`date +"%Y-%m-%d_%H-%M-%S"` Start vacuum base1c" >> /var/log/postgresql/service.log # Выполняем очистку и анализ базы данных /usr/bin/vacuumdb --full --analyze --username postgres --dbname base1c echo "`date +"%Y-%m-%d_%H-%M-%S"` End vacuum base1c" >> /var/log/postgresql/service.log sleep 2 echo "`date +"%Y-%m-%d_%H-%M-%S"` Start reindex base1c" >> /var/log/postgresql/service.log # Переиндексирвоать базу /usr/bin/reindexdb --username postgres --dbname base1c echo "`date +"%Y-%m-%d_%H-%M-%S"` End reindex base1c" >> /var/log/postgresql/service.log

Сохраняем скрипт и добавляем в планировщик. Хотя я для удобства сделал еще один скрипт, который объединяет бэкап и обслуживание и уже его добавил в cron:

# cat all-sql.sh

#!/bin/sh /root/bin/backup-sql.sh sleep 2 /root/bin/service-sql.sh

Добавялем в /etc/crontab:

# Бэкап и обслуживание БД 1 3 * * * root /root/bin/all-sql.sh

Проверяем лог файл и наличие бэкапа. Не забывайте делать проверочное регулярное восстановление бд из архива.

Описанные выше операции очистки и переиндексации можно делать в ручном режиме в программе под windows — pgAdmin. Рекомендую ее установить на всякий случай. Достаточно удобно и быстро можно посмотреть информацию или выполнить какие-то операции с базой данных посгрес.

24.1.1. Restoring the Dump

Text files created by pg_dump are intended to be read in by the psql program. The general command form to restore a dump is

psql dbname < dumpfile

where dumpfile is the file output by the pg_dump command. The database dbname will not be created by this command, so you must create it yourself from template0 before executing psql (e.g., with createdb -T template0 dbname). psql supports options similar to pg_dump for specifying the database server to connect to and the user name to use. See the psql reference page for more information. Non-text file dumps are restored using the pg_restore utility.

Before restoring an SQL dump, all the users who own objects or were granted permissions on objects in the dumped database must already exist. If they do not, the restore will fail to recreate the objects with the original ownership and/or permissions. (Sometimes this is what you want, but usually it is not.)

By default, the psql script will continue to execute after an SQL error is encountered. You might wish to run psql with the ON_ERROR_STOP variable set to alter that behavior and have psql exit with an exit status of 3 if an SQL error occurs:

psql --set ON_ERROR_STOP=on dbname < dumpfile

Either way, you will only have a partially restored database. Alternatively, you can specify that the whole dump should be restored as a single transaction, so the restore is either fully completed or fully rolled back. This mode can be specified by passing the -1 or --single-transaction command-line options to psql. When using this mode, be aware that even a minor error can rollback a restore that has already run for many hours. However, that might still be preferable to manually cleaning up a complex database after a partially restored dump.

The ability of pg_dump and psql to write to or read from pipes makes it possible to dump a database directly from one server to another, for example:

pg_dump -h host1 dbname | psql -h host2 dbname

23.1.1. Restoring the dump

The text files created by pg_dump are intended to be read in by

the psql program. The

general command form to restore a dump is

psql dbname < infile

where infile is what you

used as outfile for the

pg_dump command. The

database dbname will not be

created by this command, you must create it yourself from

template0 before executing

psql (e.g., with createdb -T template0 dbname). psql supports options similar to

pg_dump for controlling the

database server location and the user name. See psql’s

reference page for more information.

Not only must the target database already exist before

starting to run the restore, but so must all the users who

own objects in the dumped database or were granted

permissions on the objects. If they do not, then the restore

will fail to recreate the objects with the original ownership

and/or permissions. (Sometimes this is what you want, but

usually it is not.)

Once restored, it is wise to run ANALYZE on each database so the

optimizer has useful statistics. An easy way to do this is to

run vacuumdb -a -z to VACUUM ANALYZE all databases; this is

equivalent to running VACUUM ANALYZE

manually.

The ability of pg_dump

and psql to write to or read

from pipes makes it possible to dump a database directly from

one server to another; for example:

pg_dump -h host1 dbname | psql -h host2 dbname

Examples

To dump a database called into a SQL-script file:

To reload such a script into a (freshly created) database named :

To dump a database into a custom-format archive file:

To dump a database into a directory-format archive:

To dump a database into a directory-format archive in parallel with 5 worker jobs:

To reload an archive file into a (freshly created) database named :

To reload an archive file into the same database it was dumped from, discarding the current contents of that database:

To dump a single table named :

To dump all tables whose names start with in the schema, except for the table named :

To dump all schemas whose names start with or and end in , excluding any schemas whose names contain the word :

The same, using regular expression notation to consolidate the switches:

To dump all database objects except for tables whose names begin with :

To specify an upper-case or mixed-case name in and related switches, you need to double-quote the name; else it will be folded to lower case (see ). But double quotes are special to the shell, so in turn they must be quoted. Thus, to dump a single table with a mixed-case name, you need something like

Заключение

Напоминаю, что данная статья является частью единого цикла статьей про сервер Debian.

Онлайн курсы по Mikrotik

Если у вас есть желание научиться работать с роутерами микротик и стать специалистом в этой области, рекомендую пройти курсы по программе, основанной на информации из официального курса MikroTik Certified Network Associate. Помимо официальной программы, в курсах будут лабораторные работы, в которых вы на практике сможете проверить и закрепить полученные знания. Все подробности на сайте .

Стоимость обучения весьма демократична, хорошая возможность получить новые знания в актуальной на сегодняшний день предметной области. Особенности курсов:

- Знания, ориентированные на практику;

- Реальные ситуации и задачи;

- Лучшее из международных программ.