S3cmd


Managing public access to buckets

Public access is granted to buckets and objects through access control lists (ACLs), bucket policies, or both. To help you manage public access to Amazon S3 resources, Amazon S3 provides block public access settings. Amazon S3 block public access settings can override ACLs and bucket policies so that you can enforce uniform limits on public access to these resources. You can apply block public access settings to individual buckets or to all buckets in your account.

To help ensure that all of your Amazon S3 buckets and objects have their public access blocked, we recommend that you turn on all four settings for block public access for your account. These settings block public access for all current and future buckets.

Before applying these settings, verify that your applications will work correctly without public access. If you require some level of public access to your buckets or objects, for example to host a static website as described at Hosting a static website on Amazon S3, you can customize the individual settings to suit your storage use cases. For more information, see Using Amazon S3 block public access.
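The four settings mentioned above can be written down as a small configuration. The following is a minimal sketch, assuming the setting names used by the S3 PublicAccessBlock configuration (BlockPublicAcls, IgnorePublicAcls, BlockPublicPolicy, RestrictPublicBuckets). The helper name `fully_blocked` is hypothetical and only checks a local dictionary; it does not call AWS.

```python
# The four block public access settings, all turned on as recommended above.
PUBLIC_ACCESS_BLOCK = {
    "BlockPublicAcls": True,
    "IgnorePublicAcls": True,
    "BlockPublicPolicy": True,
    "RestrictPublicBuckets": True,
}


def fully_blocked(config: dict) -> bool:
    """Return True only if all four settings are present and enabled."""
    required = (
        "BlockPublicAcls",
        "IgnorePublicAcls",
        "BlockPublicPolicy",
        "RestrictPublicBuckets",
    )
    return all(config.get(name) is True for name in required)
```

A configuration that relaxes a single setting, for example to allow a public bucket policy for a static website, would no longer pass this check.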

Related services

After you load your data into Amazon S3, you can use it with other AWS services. The following are the services you might use most frequently:

- Amazon Elastic Compute Cloud (Amazon EC2) – This service provides virtual compute resources in the cloud. For more information, see the Amazon EC2 product details page.

- Amazon EMR – This service enables businesses, researchers, data analysts, and developers to easily and cost-effectively process vast amounts of data. It uses a hosted Hadoop framework running on the web-scale infrastructure of Amazon EC2 and Amazon S3. For more information, see the Amazon EMR product details page.

- AWS Snowball – This service accelerates transferring large amounts of data into and out of AWS using physical storage devices, bypassing the internet. Each AWS Snowball device type can transport data at faster-than-internet speeds. This transport is done by shipping the data in the devices through a regional carrier. For more information, see the AWS Snowball product details page.

Amazon S3 features

This section describes important Amazon S3 features.


Storage classes

Amazon S3 offers a range of storage classes designed for different use cases. These include Amazon S3 STANDARD for general-purpose storage of frequently accessed data, Amazon S3 STANDARD_IA for long-lived, but less frequently accessed data, and S3 Glacier for long-term archive.

For more information, see Amazon S3 storage classes.

Bucket policies

Bucket policies provide centralized access control to buckets and objects based on a variety of conditions, including Amazon S3 operations, requesters, resources, and aspects of the request (for example, IP address). The policies are expressed in the access policy language and enable centralized management of permissions. The permissions attached to a bucket apply to all of the objects in that bucket.

Both individuals and companies can use bucket policies. When companies register with Amazon S3, they create an account. Thereafter, the company becomes synonymous with the account. Accounts are financially responsible for the AWS resources that they (and their employees) create. Accounts have the power to grant bucket policy permissions and assign employees permissions based on a variety of conditions. For example, an account could create a policy that gives a user write access:

- To a particular S3 bucket

- From an account’s corporate network

- During business hours

An account can grant one user limited read and write access, but allow another to create and delete buckets also. An account could allow several field offices to store their daily reports in a single bucket. It could allow each office to write only to a certain set of names (for example, "Nevada/*" or "Utah/*") and only from the office’s IP address range.
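The field-office scenario above can be sketched as a bucket policy document. This is a minimal illustration, not a policy taken from the source: the bucket name `daily-reports`, the CIDR range `192.0.2.0/24` (a documentation-only range), and the helper `office_write_policy` are all hypothetical. The policy keys (`Version`, `Statement`, `Effect`, `Principal`, `Action`, `Resource`, `Condition`), the `s3:PutObject` action, and the `aws:SourceIp` condition key follow the standard access policy language.

```python
import json


def office_write_policy(bucket: str, office: str, cidr: str) -> dict:
    """Build a bucket policy that allows writes under `office`/ from `cidr` only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": f"AllowWriteFrom{office}",
                "Effect": "Allow",
                # Any principal, but only when the request comes from `cidr`.
                "Principal": "*",
                "Action": "s3:PutObject",
                # Only keys that start with the office prefix, e.g. Nevada/*.
                "Resource": f"arn:aws:s3:::{bucket}/{office}/*",
                "Condition": {"IpAddress": {"aws:SourceIp": cidr}},
            }
        ],
    }


# Hypothetical values for illustration only.
policy = office_write_policy("daily-reports", "Nevada", "192.0.2.0/24")
print(json.dumps(policy, indent=2))
```

In practice you would likely restrict `Principal` to specific IAM users or roles instead of `"*"`; the sketch keeps it broad only to focus on the prefix and IP conditions.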

Unlike access control lists (described later), which can add (grant) permissions only on individual objects, policies can either add or deny permissions across all (or a subset) of objects within a bucket. With one request, an account can set the permissions of any number of objects in a bucket. An account can use wildcards (similar to regular expression operators) on Amazon Resource Names (ARNs) and other values. The account could then control access to groups of objects that begin with a common prefix or end with a given extension, such as .html.
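To get a feel for how wildcard patterns on ARNs select groups of objects, the sketch below approximates the matching locally with Python's `fnmatch` module. This is only an approximation under stated assumptions: `fnmatch` also treats `[...]` as a character class, which policy wildcards do not, and the bucket name `example-bucket` and the helper `arn_matches` are hypothetical.

```python
from fnmatch import fnmatchcase


def arn_matches(pattern: str, arn: str) -> bool:
    """Case-sensitive wildcard match: '*' is any run of characters, '?' is one."""
    return fnmatchcase(arn, pattern)


# Objects that end with a given extension.
html_pattern = "arn:aws:s3:::example-bucket/*.html"
# Objects that begin with a common prefix.
nevada_pattern = "arn:aws:s3:::example-bucket/Nevada/*"

print(arn_matches(html_pattern, "arn:aws:s3:::example-bucket/docs/index.html"))
print(arn_matches(nevada_pattern, "arn:aws:s3:::example-bucket/Utah/report.csv"))
```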

Only the bucket owner is allowed to associate a policy with a bucket. Policies (written in the access policy language) allow or deny requests based on the following:

- Amazon S3 bucket operations and object operations

- Requester

- Conditions specified in the policy

An account can control access based on specific Amazon S3 operations.

The conditions can be such things as IP addresses, IP address ranges in CIDR notation, dates, user agents, HTTP referrer, and transports (HTTP and HTTPS).

For more information, see Using Bucket Policies and User Policies.

AWS identity and access management

You can use AWS Identity and Access Management (IAM) to manage access to your Amazon S3 resources.

For example, you can use IAM with Amazon S3 to control the type of access a user or group of users has to specific parts of an Amazon S3 bucket your AWS account owns.


Access control lists

You can control access to each of your buckets and objects using an access control list (ACL). For more information, see Managing Access with ACLs.

Versioning

You can use versioning to keep multiple versions of an object in the same bucket. For more information, see Object Versioning.

Operations

Following are the most common operations that you’ll run through the API.

Common operations

- Create a bucket – Create and name your own bucket in which to store your objects.

- Write an object – Store data by creating or overwriting an object. When you write an object, you specify a unique key in the namespace of your bucket. This is also a good time to specify any access control you want on the object.

- Read an object – Read data back. You can download the data via HTTP or BitTorrent.

- Delete an object – Delete some of your data.

- List keys – List the keys contained in one of your buckets. You can filter the key list based on a prefix.
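The semantics of the operations listed above can be illustrated with a toy in-memory model. This is not the Amazon S3 API: the class `ToyBucket` and its method names are hypothetical and exist only to show that a write to an existing key overwrites it and that listing can be filtered by prefix.

```python
class ToyBucket:
    """In-memory stand-in for a bucket: maps unique keys to bytes."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._objects = {}

    def write(self, key: str, data: bytes) -> None:
        # Creates the object, or overwrites it if the key already exists.
        self._objects[key] = data

    def read(self, key: str) -> bytes:
        return self._objects[key]

    def delete(self, key: str) -> None:
        # Deleting a missing key is treated as a no-op in this toy model.
        self._objects.pop(key, None)

    def list_keys(self, prefix: str = "") -> list:
        # Return keys in sorted order, filtered by an optional prefix.
        return sorted(k for k in self._objects if k.startswith(prefix))


bucket = ToyBucket("example-bucket")
bucket.write("Nevada/monday.txt", b"first draft")
bucket.write("Nevada/monday.txt", b"final")  # overwrite
bucket.write("Utah/monday.txt", b"report")
print(bucket.list_keys(prefix="Nevada/"))
```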

These operations and all other functionality are described in detail throughout this guide.

Creating a bucket

Amazon S3 provides APIs for creating and managing buckets. By default, you can create up to 100 buckets in each of your AWS accounts. If you need more buckets, you can increase your account bucket limit to a maximum of 1,000 buckets by submitting a service limit increase. To learn how to submit a bucket limit increase, see AWS Service Limits in the AWS General Reference. You can store any number of objects in a bucket.

When you create a bucket, you provide a name and the AWS Region where you want to create the bucket.

You can use any of the methods listed below to create a bucket. For examples, see Examples of creating a bucket.

Amazon S3 console

You can create a bucket in the Amazon S3 console. For more information, see Creating a bucket in the Amazon Simple Storage Service Console User Guide.

REST API

Creating a bucket using the REST API can be cumbersome because it requires you to write code to authenticate your requests. For more information, see PUT Bucket in the Amazon Simple Storage Service API Reference. We recommend that you use the AWS Management Console or AWS SDKs instead.

AWS SDK

When you use the AWS SDKs to create a bucket, you first create a client and then use the client to send a request to create a bucket. If you don’t specify a Region when you create a client or a bucket, Amazon S3 uses US East (N. Virginia), the default Region. You can also specify a particular Region. For a list of available AWS Regions, see Regions and Endpoints in the AWS General Reference. For more information about enabling or disabling an AWS Region, see Managing AWS Regions in the AWS General Reference.

As a best practice, you should create your client and bucket in the same Region. If your Region launched after March 20, 2019, your client and bucket must be in the same Region. However, you can use a client in the US East (N. Virginia) Region to create a bucket in any Region that launched before March 20, 2019.

Creating a client

When you create the client, you should specify the AWS Region to create the client in. If you don’t specify a Region, Amazon S3 creates the client in US East (N. Virginia), the default Region. To create a client to access a dual-stack endpoint, you must specify an AWS Region.

When you create a client, the Region maps to the Region-specific endpoint. The client uses this endpoint to communicate with Amazon S3.

For example, if you create a client by specifying the eu-west-1 Region, it maps to the following Region-specific endpoint:
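The mapping from a Region to its endpoint can be sketched as a small helper. This is a minimal sketch, assuming the S3 dot Region endpoint structure (`s3.Region.amazonaws.com`) and its dual-stack variant (`s3.dualstack.Region.amazonaws.com`) in the standard AWS partition; the function name `regional_endpoint` is hypothetical, and an SDK client normally resolves the endpoint for you.

```python
def regional_endpoint(region: str, dual_stack: bool = False) -> str:
    """Return the HTTPS endpoint a client in `region` would talk to."""
    if dual_stack:
        # Dual-stack endpoints accept requests over both IPv6 and IPv4.
        host = f"s3.dualstack.{region}.amazonaws.com"
    else:
        host = f"s3.{region}.amazonaws.com"
    return f"https://{host}"


print(regional_endpoint("eu-west-1"))
print(regional_endpoint("eu-west-1", dual_stack=True))
```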

Creating a bucket

If you don’t specify a Region when you create a bucket, Amazon S3 creates the bucket in the US East (N. Virginia) Region. Therefore, if you want to create a bucket in a specific Region, you must specify the Region when you create the bucket.

Buckets created after September 30, 2020, will support only virtual hosted-style requests. Path-style requests will continue to be supported for buckets created on or before this date. For more information, see Amazon S3 Path Deprecation Plan – The Rest of the Story.

About permissions

You can use your AWS account root credentials to create a bucket and perform any other Amazon S3 operation. However, AWS recommends not using the root credentials of your AWS account to make requests such as to create a bucket. Instead, create an IAM user, and grant that user full access (users by default have no permissions). We refer to these users as administrator users. You can use the administrator user credentials, instead of the root credentials of your account, to interact with AWS and perform tasks, such as create a bucket, create users, and grant them permissions.

For more information, see Root Account Credentials vs. IAM User Credentials in the AWS General Reference and IAM Best Practices in the IAM User Guide.

The AWS account that creates a resource owns that resource. For example, if you create an IAM user in your AWS account and grant the user permission to create a bucket, the user can create a bucket. But the user does not own the bucket; the AWS account to which the user belongs owns the bucket. The user will need additional permission from the resource owner to perform any other bucket operations. For more information about managing permissions for your Amazon S3 resources, see Identity and access management in Amazon S3.

Advantages of using Amazon S3

Amazon S3 is intentionally built with a minimal feature set that focuses on simplicity and robustness. Following are some of the advantages of using Amazon S3:

- Creating buckets – Create and name a bucket that stores data. Buckets are the fundamental containers in Amazon S3 for data storage.

- Storing data – Store an infinite amount of data in a bucket. Upload as many objects as you like into an Amazon S3 bucket. Each object can contain up to 5 TB of data. Each object is stored and retrieved using a unique developer-assigned key.

- Downloading data – Download your data or enable others to do so. Download your data anytime you like, or allow others to do the same.

- Permissions – Grant or deny access to others who want to upload or download data into your Amazon S3 bucket. Grant upload and download permissions to three types of users. Authentication mechanisms can help keep data secure from unauthorized access.

- Standard interfaces – Use standards-based REST and SOAP interfaces designed to work with any internet-development toolkit.

Note

SOAP support over HTTP is deprecated, but it is still available over HTTPS. New Amazon S3 features will not be supported for SOAP. We recommend that you use either the REST API or the AWS SDKs.

CloudFront – настройка CDN и привязка HTTPS

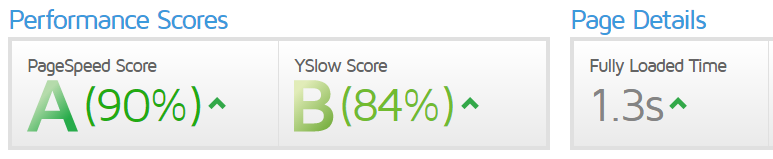

CloudFront позволит нам не только привязать к сайту бесплатный SSL-сертификат, но и позволяет использовать для доступа к сайту протокол HTTP/2, который во всех тестах показывает производительность намного выше, чем HTTP. Кроме того, большая распределенная сеть CDN CloudFront еще более увеличивает скорость доступа к нашему сайту из разных точек мира.

-

- В разделе Networking & Content Delivery выберите CloudFront.

- Нажмите на кнопку Create Distribution, а затем в разделе Web distribution нажмите кнопку Get Started

- Я задал такие настройки (остальные можно оставить по-умолчанию):

Origin: длинный URL сайта, предоставляемый S3.Viewer Protocol Policy: Redirect HTTP to HTTPSCompress Objects Automatically: YesCNAMEs: www.mystaticsite77.ruCustom SSL Certificate: выберите созданный ранее SSL сертификат.Default Root Object: index.html

Затем нажмите Create Distribution

Примечание: По умолчанию CloudFront покажет список источников и предложит использовать S3 Bucket. Вам нужно будет сменить S3 Bucket, выбрав вместо него S3 URL (Endpoint S3 bucket). К примеру, http://www.mystaticsite77.ru.s3-website-us-east-1.amazonaws.com

Генерация данных в сети CloudFront займет довольно продолжительное время – от 30 минут до нескольких часов.

Requesting a free SSL certificate through Certificate Manager

Of course, whether a static site needs an SSL certificate at all is debatable. Here we use it mostly for demonstration purposes.

- In the Security, Identity & Compliance section, open Certificate Manager.

- Click "Request a certificate", enter your domain names as *.mystaticsite77.ru and mystaticsite77.ru, and then click "Review and request".

- Confirm the certificate request, and AWS will try to send you an email to validate it. Click the arrow to display the list of email addresses the request will be sent to. This is probably the "hardest" step, because you need access to one of the mailboxes in the list. Usually this is the mailbox listed in the domain's WHOIS record (I use a privacyguardian.org address to protect my WHOIS data).

- After you receive the email from AWS, click the link in it to approve issuing the SSL certificate. Keep in mind that AWS sends two emails (one for *.mystaticsite77.ru and one for mystaticsite77.ru); you need to approve both.

Accessing a bucket

You can access your bucket using the Amazon S3 console. Using the console UI, you can perform almost all bucket operations without having to write any code.

If you access a bucket programmatically, note that Amazon S3 supports RESTful architecture in which your buckets and objects are resources, each with a resource URI that uniquely identifies the resource.

Amazon S3 supports both virtual-hosted–style and path-style URLs to access a bucket. Because buckets can be accessed using path-style and virtual-hosted–style URLs, we recommend that you create buckets with DNS-compliant bucket names. For more information, see Bucket restrictions and limitations.

Note

Virtual hosted style and path-style requests use the S3 dot Region endpoint structure (s3.Region). However, some older Amazon S3 Regions also support S3 dash Region endpoints (s3-Region). If your bucket is in one of these Regions, you might see s3-Region endpoints in your server access logs or CloudTrail logs. We recommend that you do not use this endpoint structure in your requests.

Virtual hosted style access

In a virtual-hosted–style request, the bucket name is part of the domain name in the URL.

Amazon S3 virtual hosted style URLs follow the format shown below.

In this example, is the bucket name, US West (Oregon) is the Region, and is the key name:

For more information about virtual hosted style access, see .

Path-style access

In Amazon S3, path-style URLs follow the format shown below.

For example, if you create a bucket named in the US West (Oregon) Region,

and you want to access the object in that bucket, you can use the

following path-style URL:

For more information, see .
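The difference between the two URL styles can be sketched in a few lines. This is an illustration under stated assumptions: it uses the S3 dot Region endpoint structure, `us-west-2` as the Region code for US West (Oregon), and a hypothetical bucket name `example-bucket` and key `photos/puppy.png`; the helper names are also hypothetical.

```python
from urllib.parse import quote


def virtual_hosted_url(bucket: str, region: str, key: str) -> str:
    """Virtual-hosted style: the bucket name is part of the host name."""
    return f"https://{bucket}.s3.{region}.amazonaws.com/{quote(key)}"


def path_style_url(bucket: str, region: str, key: str) -> str:
    """Path style: the bucket name is the first segment of the path."""
    return f"https://s3.{region}.amazonaws.com/{bucket}/{quote(key)}"


print(virtual_hosted_url("example-bucket", "us-west-2", "photos/puppy.png"))
print(path_style_url("example-bucket", "us-west-2", "photos/puppy.png"))
```

`quote` percent-encodes characters such as spaces in the key while leaving `/` intact, so keys with folder-like prefixes stay readable.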

Important

Buckets created after September 30, 2020, will support only virtual hosted-style requests. Path-style requests will continue to be supported for buckets created on or before this date. For more information, see Amazon S3 Path Deprecation Plan – The Rest of the Story.

Accessing an S3 bucket over IPv6

Amazon S3 has a set of dual-stack endpoints, which support requests to S3 buckets over both Internet Protocol version 6 (IPv6) and IPv4. For more information, see Making requests over IPv6.

Accessing a bucket through an S3 access point

In addition to accessing a bucket directly, you can access a bucket through an S3 access point. For more information about S3 access points, see Managing data access with Amazon S3 access points.

S3 access points only support virtual-host-style addressing. To address a bucket through an access point, use this format:

Note

- If your access point name includes dash (-) characters, include the dashes in the URL and insert another dash before the account ID.

- S3 access points don’t support access by HTTP, only secure access by HTTPS.
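The access point addressing rules above can be sketched as follows. This is a minimal sketch, assuming the host name format `AccessPointName-AccountId.s3-accesspoint.Region.amazonaws.com`; the access point name `finance-docs`, the account ID `123456789012`, and the helper `access_point_url` are hypothetical.

```python
def access_point_url(name: str, account_id: str, region: str) -> str:
    """Build the HTTPS URL for an access point (HTTP is not supported)."""
    # Dashes inside the name are kept; one more dash separates the account ID.
    return f"https://{name}-{account_id}.s3-accesspoint.{region}.amazonaws.com"


print(access_point_url("finance-docs", "123456789012", "us-west-2"))
```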

Accessing a Bucket using S3://

Some AWS services require specifying an Amazon S3 bucket using S3://. The correct format is shown below. Be aware that when using this format, the bucket name does not include the Region.

For example, using the sample bucket described in the earlier path-style section:
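An S3:// reference takes the form `s3://bucket-name/key-name`, with no Region in it. The sketch below splits such a reference into its bucket and key using the standard library; the helper name `parse_s3_uri`, the bucket `example-bucket`, and the key are hypothetical. It is a simplification: keys that contain `?` or `#` would be split off by `urlsplit` and need extra handling.

```python
from urllib.parse import urlsplit


def parse_s3_uri(uri: str) -> tuple:
    """Split 's3://bucket/key' into (bucket, key); the key may be empty."""
    parts = urlsplit(uri)
    if parts.scheme.lower() != "s3" or not parts.netloc:
        raise ValueError(f"not an S3 URI: {uri!r}")
    # The bucket is the authority part; the key is the path without its leading slash.
    return parts.netloc, parts.path.lstrip("/")


print(parse_s3_uri("s3://example-bucket/photos/puppy.png"))
```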

Redirecting the non-WWW domain to WWW

We are almost done. What remains is a bit of search engine optimization: so that search engines see only one site, we set up a redirect from https://mystaticsite77.ru to the WWW address https://www.mystaticsite77.ru

- To do this, create one more S3 bucket named mystaticsite77.ru and enable static website hosting for it as well, but choose the "Redirect Requests" option and specify the name of the first bucket (www.mystaticsite77.ru).

- In CloudFront, create a new distribution with the following settings. Origin: the address of the second S3 site (endpoint URL). CNAMEs: mystaticsite77.ru. Custom SSL Certificate: select your certificate. Then click "Create Distribution".

- Then, in Route 53, create one more record for mystaticsite77.ru of type A – IPv4 with an alias pointing to the second CloudFront distribution. Save the changes.

Wait for the changes to propagate, and make sure that the 301 redirect from the non-www address to the www address works.

Bucket configuration options

Amazon S3 supports various options for you to configure your bucket. For example, you can configure your bucket for website hosting, add configuration to manage lifecycle of objects in the bucket, and configure the bucket to log all access to the bucket. Amazon S3 supports subresources for you to store and manage the bucket configuration information. You can use the Amazon S3 API to create and manage these subresources. However, you can also use the console or the AWS SDKs.

Note

There are also object-level configurations. For example, you can configure object-level permissions by configuring an access control list (ACL) specific to that object.

These are referred to as subresources because they exist in the context of a specific bucket or object. The following table lists subresources that enable you to manage bucket-specific configurations.

| Subresource | Description |
|---|---|
| cors (cross-origin resource sharing) | You can configure your bucket to allow cross-origin ... For more information, see Enabling Cross-Origin Resource Sharing. |
| event notification | You can enable your bucket to send you notifications of specified ... For more information, see Configuring Amazon S3 event notifications. |
| lifecycle | You can define lifecycle rules for objects in your bucket that ... For more information, see Object Lifecycle |
| location | When you create a bucket, you specify the AWS Region where you ... |
| logging | Logging enables you to track requests for access to your bucket. For more information, see Amazon S3 server access logging. |
| object locking | To use S3 Object Lock, you must enable it for a bucket. You can ... |
| policy and ACL (access control list) | All your resources (such as buckets and objects) are private by ... For more information, see Identity and access management in Amazon S3. |
| replication | Replication is the automatic, asynchronous copying of objects ... |
| requestPayment | By default, the AWS account that creates the bucket (the bucket ... For more information, see Requester Pays buckets. |
| tagging | You can add cost allocation tags to your bucket to categorize and ... For more information, see Billing and usage reporting for S3 buckets. |
| transfer acceleration | Transfer Acceleration enables fast, easy, and secure transfers of files ... For more information, see Amazon S3 Transfer Acceleration. |
| versioning | Versioning helps you recover accidental overwrites and deletes. We recommend versioning as a best practice to recover objects from ... For more information, see Using versioning. |
| website | You can configure your bucket for static website hosting. Amazon S3 ... For more information, see Hosting a Static Website |